MAY NOT BE REPRODUCED WITHOUT PERMISSION

Table of Contents

- AWS Basics

- AWS Cost Reduction

- Optimizing ECS and EC2

- Kubernetes (EKS) Optimization

- Big Data Optimization (EMR)

- Optimizing AWS with Granulate

- About Granulate, an Intel Company


Executive Summary

The cloud infrastructure market is now valued at $120 billion and continues to grow at a rate of nearly 30% per year. [1] The market is dominated by two players, Amazon Web Services (AWS) and Microsoft Azure. AWS accounts for nearly 40% of the total IaaS marketplace and generates nearly twice the revenue of Azure. [2]

The growing suite of AWS compute products, including EC2, ECS, Lambda, and Elastic Beanstalk, enables customers to run more applications and workloads in the cloud for a wide range of use cases, but this often leads to significant and unnecessary overspending.

Frequent overprovisioning of resources and a lack of visibility are common. 94% of enterprises report overspending on cloud resources. [3] As much as a third of all cloud spending is wasted. [4]

Optimization is crucial for cost management amid growing complexity and usage. Rightsizing workloads and cutting unnecessary spend keep costs under control while maintaining the resources you need.

In this guide, we will explore strategies to control your costs and optimize your AWS resources.

AWS Basics

AWS optimization is an ongoing process that requires continuous evaluation and fine-tuning to ensure optimal performance, reliability, and environmental sustainability.

Performance

Improving speed, scalability, and throughput

- Choosing appropriate instance types and sizes

- Implementing load balancing

- Optimizing storage and network configurations

- Caching frequently accessed data

Reliability

Ensuring high availability and fault tolerance

- Leveraging multi-zone architectures

- Enabling automated recovery and backups

- Using auto-scaling groups

- Monitoring and responding to alerts and events

Environmental Sustainability

Reducing unneeded resource consumption and carbon footprints

- Consolidating workloads on optimized instances

- Scheduling instances to shut down when not in use

- Deleting unused resources

- Auto-scaling to right-size resources for workload demands

AWS Cost Reduction

AWS features more than 200 services on its platform. [5] Choosing the right ones and managing resources effectively is a challenge.

Pricing is driven by three main factors:

- Storage

- Compute resources

- Data transfer

Costs vary depending on the product and pricing model you choose.

While AWS doesn’t charge for inbound data transfers or service transfers within the same AWS region, outbound data transfers can lead to significant costs without continuous management. Disorganized use of regions or failure to optimize your infrastructure can create hefty egress fees that add up to an average of 6% of total storage costs. [6]

Seven Strategies for Reducing AWS Costs

- Choose the Right AWS Region

Choosing the right region is more than just selecting the closest region to your location. While choosing more distant regions may introduce additional latency, there may be significant cost savings by leveraging different regions where latency is not as important. Keep in mind that not all services are available in all regions.

- Get Unified Billing for all Accounts

If you have multiple accounts, consolidating them into one invoice can help you monitor your spending holistically.

- Choose the Right Pricing Models

You can optimize your AWS accounts by choosing the most cost-efficient model for different types of workloads:

– On-demand instances: You can start and stop them at any time, but they carry the highest rates.

– Reserved instances: Can reduce costs by up to 70% vs. on-demand EC2 or RDS capacity in exchange for a one-year or three-year commitment.

– Spot instances: Can reduce costs by up to 90% compared with on-demand prices without a commitment. Keep in mind that AWS offers these discounts on unused capacity and can reclaim that capacity whenever it is needed.

– AWS Savings Plans: Can reduce Fargate, Lambda, and EC2 costs with a flexible pricing model.

- Turn off Unused Instances

Make turning off unused instances part of your standard operating procedures, especially at the end of workdays and on weekends or holidays. You can also implement scheduled on/off times for non-production instances.

- Right Size Low Utilization Instances

The AWS Cost Explorer Resource Optimization Report can help you identify idle or underutilized instances, and AWS Compute Optimizer can provide recommendations across instance families. [7]

- Delete Idle Load Balancers

Idle load balancers are still billed even when they carry little traffic. Identifying and deleting load balancers in your AWS account that have little or no traffic going through them can reduce costs.

- Monitor Storage Use

S3 offers multiple storage tiers with different costs. You can reduce costs by moving data you access infrequently into lower-cost tiers instead of paying for more expensive standard storage. You can also implement lifecycle policies to automatically move less-used objects to Infrequent Access (IA) storage after a specified period.
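As a minimal sketch of the lifecycle policy idea above, the following Python builds a rule in the dictionary shape that the S3 lifecycle API accepts. The function name, the `logs/` prefix, and the rule ID format are hypothetical and chosen for illustration only. The sketch only builds and validates the configuration; with boto3 it could be passed as the `LifecycleConfiguration` argument of `put_bucket_lifecycle_configuration`.

```python
def build_lifecycle_config(prefix, ia_after_days=30):
    """Build a lifecycle configuration that moves objects under `prefix`
    to Infrequent Access storage after `ia_after_days` days.

    Hypothetical helper for illustration; it does not call AWS.
    """
    # S3 requires objects to be stored for at least 30 days before
    # they can transition to the STANDARD_IA storage class.
    if ia_after_days < 30:
        raise ValueError("ia_after_days must be at least 30")

    rule_name = prefix.strip("/") or "all-objects"
    return {
        "Rules": [
            {
                "ID": f"move-{rule_name}-to-ia",  # hypothetical naming scheme
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
                ],
            }
        ]
    }


# Example: move objects under the (hypothetical) logs/ prefix after 45 days.
config = build_lifecycle_config("logs/", ia_after_days=45)
print(config["Rules"][0]["ID"])
```

Choosing the waiting period is a judgment call: a shorter period saves more on storage, but objects that are still read often can cost more in retrieval fees once they sit in a lower-cost tier.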

Optimizing ECS and EC2

Amazon EC2 Auto Scaling helps ensure the right number of EC2 instances is available to handle application load. You can create Auto Scaling groups, specifying the number of instances you want in each group, and the auto-scaler will make sure you have enough instances.

You can set minimums and maximums so that your groups stay within the limits you define, while new instances are added as demand increases and terminated when they are no longer needed. This reduces expenses automatically. You pay for actual usage within a cost-effective infrastructure while making sure capacity is available to meet your demanding workloads.

You can set triggers for scale-in and scale-out.

Scale Out Automation

- Increase group sizes at specified times

- Increase group size on demand

- Manually increase group sizes

Scale In Automation

- Reduce group sizes at specified times

- Reduce group size on demand

- Manually reduce group size
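To make the interaction between demand-based triggers and group limits concrete, here is a minimal Python sketch. It assumes a simplified proportional model in which the group is resized so that a metric such as average CPU utilization returns to a target value; this is an illustrative approximation, not the exact algorithm AWS uses, and the function name and parameters are hypothetical.

```python
import math


def desired_capacity(current, metric_value, target_value, min_size, max_size):
    """Estimate a new group size and clamp it to the group's limits.

    Simplified proportional model (an assumption for illustration):
    if the metric is above target the group scales out (adds instances),
    and if it is below target the group scales in (removes instances).
    """
    if target_value <= 0:
        raise ValueError("target_value must be positive")
    raw = math.ceil(current * metric_value / target_value)
    # The minimum and maximum always win over the raw estimate.
    return max(min_size, min(max_size, raw))


# Scale out: 4 instances at 90% CPU with a 60% target.
print(desired_capacity(4, 90, 60, min_size=2, max_size=10))
# Scale in: 4 instances at 15% CPU, held at the minimum of 2.
print(desired_capacity(4, 15, 60, min_size=2, max_size=10))
# Spike: the maximum caps the group, which also caps spending.
print(desired_capacity(4, 300, 60, min_size=2, max_size=10))
```

The maximum acts as a spending ceiling and the minimum as an availability floor, which is why both are worth setting deliberately rather than leaving at defaults.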

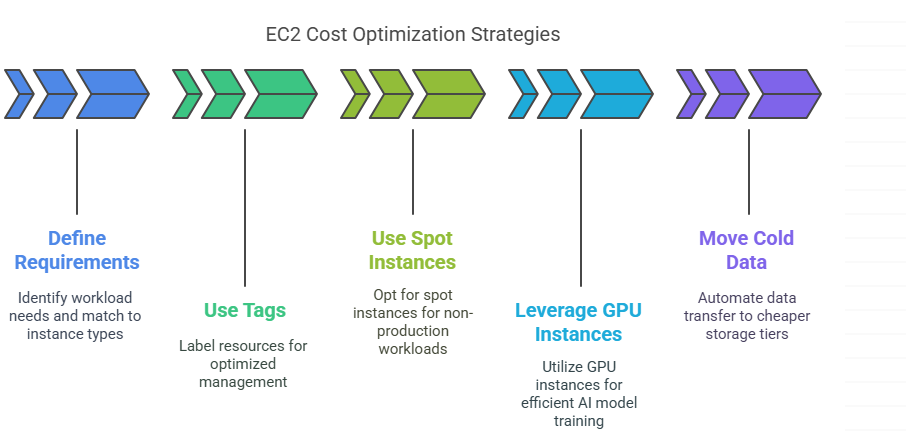

Five Strategies to Reduce EC2 Costs

- Define Requirements

The biggest item on most cloud bills is computing costs, so make sure you are only provisioning what you will really need. Identify the needs for each workload and match them to targeted instance types. EC2 instances have different price/performance ratios, allowing you to achieve higher performance and lower costs for different workloads.

- Use Tags to Target Cost Optimization

Tags can help you find and optimize specific EC2 instances in the AWS console and API. By labeling resources, you can manage them in groups to optimize production instances, non-production instances, and batch-processing jobs.

- Use Spot Instances for Stateless, Non-production Workloads

For stateless and non-production workloads, opt for EC2 spot instances rather than regular instances. This can significantly reduce costs.

- Leverage GPU Instances

Amazon’s GPU instances may cost more, but can be a faster, more efficient way to train machine learning, deep learning, and AI models. You will want to do a cost analysis to double-check, but you can often reduce overall costs because you will reduce total instance hours.

- Move Cold Data to Cheaper Storage Tiers

Automate the identification of data that is no longer needed in production and move it to lower-cost storage tiers, including S3 Infrequent Access, S3 Glacier, or S3 Glacier Deep Archive. [8]
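The cost analysis suggested for GPU instances above comes down to simple arithmetic: a more expensive instance is cheaper overall when it cuts instance hours by more than the price ratio. The sketch below illustrates this in Python. All hourly rates and training times are hypothetical placeholders, not AWS prices; substitute the figures for your own instance types and measured run times.

```python
def training_cost(hours, hourly_rate):
    """Total cost of a training run billed by instance hours."""
    return hours * hourly_rate


def break_even_speedup(gpu_hourly_rate, cpu_hourly_rate):
    """Speedup the GPU instance must deliver before it becomes cheaper."""
    return gpu_hourly_rate / cpu_hourly_rate


# Hypothetical figures for illustration only.
CPU_RATE = 0.80   # USD per hour, assumed
GPU_RATE = 3.00   # USD per hour, assumed
CPU_HOURS = 40    # measured training time on the CPU instance, assumed
GPU_HOURS = 5     # measured training time on the GPU instance, assumed

cpu_cost = training_cost(CPU_HOURS, CPU_RATE)
gpu_cost = training_cost(GPU_HOURS, GPU_RATE)
needed = break_even_speedup(GPU_RATE, CPU_RATE)
actual = CPU_HOURS / GPU_HOURS

print(f"CPU run: ${cpu_cost:.2f}, GPU run: ${gpu_cost:.2f}")
print(f"Break-even speedup: {needed:.2f}x, measured speedup: {actual:.2f}x")
```

In this made-up scenario the GPU instance costs 3.75 times as much per hour but finishes 8 times faster, so the GPU run is the cheaper one. If the measured speedup were below the break-even value, the CPU instance would win.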

Case Study

Sharethrough is a sustainable advertising platform providing supply-side services for publishers and content creators, serving 120 billion ad impressions and processing 275+ terabytes of data daily and 1.6 million queries per second.

The Challenge

With rapid growth, EC2 spending had grown substantially. Sharethrough wanted to lower costs without impacting performance.

The Results

Using Granulate’s continuous profiling solution and optimization agents, Sharethrough reduced CPU utilization by 26% in less than a week, leading to a 17% cost reduction without deploying any additional engineering resources.

Granulate Optimization Solutions

- 26% reduction in CPU utilization

- 17% reduction in EC2 costs

- 156% ROI

- 0 developer hours

"After one week, we saw improvements in both CPU and throughput."

Zhibek Elemanova, Sr. Operations Engineer, Sharethrough

Kubernetes (EKS) Optimization

Nearly two-thirds of organizations are using Kubernetes in production, citing benefits such as improved scalability, availability, and efficiency. [9] However, this movement also leads to higher expenses. 68% of companies surveyed reported an increase in costs; half noted costs rising more than 20%. [10]

Kubernetes also presents challenges in management, including a constantly changing environment, deployment complexity, and often siloed teams that have conflicting priorities.

Amazon Elastic Kubernetes Service (Amazon EKS) is a managed service that streamlines the deployment, management, and scaling of Kubernetes on AWS and integrates Kubernetes with other AWS services. [11] EKS automatically handles the provisioning of Kubernetes clusters.

However, overprovisioning node instances, failing to manage idle nodes and persistent volumes, and failing to monitor resource usage can drive up costs.

Four Strategies to Optimize EKS

- Right Size EC2 Instances

Evaluate your workloads and choose the lowest-cost instance that meets performance requirements. Monitoring resource utilization can reduce overprovisioning and help you right size your EC2 instances to better manage costs.

- Use Fargate for Stateless Workloads

Limit EC2 instances to stateful workloads and deploy AWS Fargate for stateless workloads, such as containerized microservices apps, CDNs, print services, and other applications where past data or state does not need to be persistent. Fargate enables scaling underlying infrastructures without having to manage servers at a granular level.

- Use Spot Instances

Implement spot instances for non-production workloads that are not mission-critical, such as Dev/Test environments. Spot instances are also a lower-cost solution for flexible workloads such as batch processing that can handle interruptions.

- Enable Autoscaling

Use EKS autoscaling to automatically adjust the number of worker nodes based on CPU and memory utilization. Workloads run on the minimum number of nodes needed for the current load, while performance stays stable because nodes are added when demand increases and idle nodes are removed when they are no longer needed.
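The right-sizing step above, choosing the lowest-cost instance that meets performance requirements, can be sketched as a small selection routine. The catalog below is entirely hypothetical (made-up names, sizes, and prices, not real EC2 instance types), and the 20% headroom is an assumed safety margin that you would tune to your own workload.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: int
    memory_gib: float
    hourly_usd: float


# Hypothetical catalog for illustration; not real AWS instance types or prices.
CATALOG = [
    InstanceType("small-a", 2, 4, 0.05),
    InstanceType("medium-a", 2, 8, 0.09),
    InstanceType("compute-b", 4, 8, 0.15),
    InstanceType("large-a", 4, 16, 0.17),
]


def cheapest_fit(
    catalog: List[InstanceType],
    peak_vcpus: float,
    peak_memory_gib: float,
    headroom: float = 0.2,
) -> Optional[InstanceType]:
    """Return the cheapest instance covering observed peak usage plus headroom.

    Returns None when nothing in the catalog is large enough.
    """
    need_cpu = peak_vcpus * (1 + headroom)
    need_mem = peak_memory_gib * (1 + headroom)
    candidates = [
        i for i in catalog
        if i.vcpus >= need_cpu and i.memory_gib >= need_mem
    ]
    return min(candidates, key=lambda i: i.hourly_usd, default=None)


# A workload that peaks at 1.5 vCPUs and 5 GiB of memory.
choice = cheapest_fit(CATALOG, peak_vcpus=1.5, peak_memory_gib=5.0)
print(choice.name if choice else "no instance fits")
```

The peak figures would come from monitoring data. Feeding in requested rather than observed usage tends to reproduce the overprovisioning the strategy is meant to remove.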

Case Study

ironSource is a software company that develops technology for app monetization and distribution for rapid, scalable growth throughout the entire app lifecycle. ironSource processes mass amounts of data, powering 87% of the top 100 gaming apps.

The Challenge

ironSource handles 25 billion daily ad requests with a 100-terabyte data pipeline and processes some 4 billion events daily. The company wanted to reduce expenses, but not at the cost of latency, because success in real-time bidding is measured in milliseconds.

The Results

Using Granulate’s continuous optimization agent, ironSource was able to process 29% more throughput and reduce its cloud bill by 25% across the entire online ad stack within just two weeks of deployment.

Granulate Optimization Solutions

- 25% reduction in cloud costs

- 21% reduction in instances

- 29% increase in throughput

- 2 weeks to results

"We saw a quick win in terms of cost reduction, with almost no R&D resources, when every other cost reduction approach required a lot of resources and disrupted the team from moving forward with the business plan."

Gil Shoshan, Sr. Software Developer, ironSource

Big Data Optimization (EMR)

Processing Big Data for business intelligence (BI) and analytics comes with its own set of challenges. The mass amounts of data and diverse sources can strain compute resources. Processing requires large clusters, creating expenses for provisioning, maintaining, and storing data.

Amazon Elastic MapReduce (EMR) enables easier processing for Big Data using open-source frameworks such as Apache Spark, Hadoop, and Hive. [12]

Five Strategies to Optimize EMR

- Use AWS Spot Instances

Whenever possible, use AWS spot instances rather than on-demand instances to lower costs. If you set a maximum price for spot instances, keep it below the on-demand price. Because spot instances can be interrupted when the spot price exceeds your maximum price or when AWS reclaims the capacity, automate your pipelines to detect spot instance terminations and reschedule jobs on different clusters as needed.

- Share Clusters

Share clusters across multiple small jobs rather than launching a separate cluster for every job. Where usage is billed by the hour, you pay for the full 60 minutes whether your job takes 15 minutes or an hour. So, sharing one cluster to handle four 15-minute jobs can reduce costs significantly. Similar workloads with spikes at different times may also be good candidates for cluster sharing.

- Use EMR Tags

Amazon EMR tags allow you to track the cost of cloud usage by project, job, or department. Tags are automatically applied to the underlying EC2 instances, giving you greater visibility into detailed billing data so you can assess ROI.

- Monitor Cluster Lifecycles

Deploy strategies to track clusters and manage them throughout their lifecycles. AWS APIs can track cluster utilization and idle time, allowing you to automatically terminate idle clusters to reduce costs. You can also terminate transient clusters as jobs complete.

- Manage Cluster Resources

Choose optimal instances to match workload requirements and only allocate necessary core and task nodes to avoid overprovisioning. You can scale cluster resources up or down based on demand, keeping the cluster as small as the workload allows to reduce costs.
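The cluster-sharing arithmetic above (four 15-minute jobs on one cluster instead of four clusters) can be worked through with a short Python sketch. It assumes usage is rounded up to a billing increment, with a 60-minute default to match the scenario in the text. The hourly rate is a hypothetical placeholder, and cluster startup time is deliberately not modeled.

```python
import math


def billed_hours(runtime_minutes, increment_minutes=60):
    """Round a runtime up to the billing increment and convert to hours."""
    increments = math.ceil(runtime_minutes / increment_minutes)
    return increments * increment_minutes / 60


def separate_clusters_cost(job_minutes, hourly_rate, increment_minutes=60):
    """Each job runs on its own cluster and is rounded up on its own."""
    hours = sum(billed_hours(m, increment_minutes) for m in job_minutes)
    return hours * hourly_rate


def shared_cluster_cost(job_minutes, hourly_rate, increment_minutes=60):
    """All jobs run back to back on one cluster; rounding happens once."""
    return billed_hours(sum(job_minutes), increment_minutes) * hourly_rate


JOBS = [15, 15, 15, 15]   # four 15-minute jobs, as in the text
RATE = 10.0               # USD per cluster-hour, hypothetical

print(f"Separate clusters: ${separate_clusters_cost(JOBS, RATE):.2f}")
print(f"Shared cluster:    ${shared_cluster_cost(JOBS, RATE):.2f}")
```

Under the hourly assumption the shared cluster costs a quarter as much. If your billing increment is finer, pass a smaller `increment_minutes`; the rounding advantage then shrinks, and the remaining benefit of sharing comes mainly from avoiding repeated cluster startup, which this sketch leaves out.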

Case Study

Claroty is a cybersecurity company that protects critical infrastructure and automation controls from threat actors, serving the industrial, commercial, healthcare, and public sectors.

The Challenge

Claroty runs most of its business logic on Kubernetes clusters and uses Apache Spark to handle, process, and analyze incoming data. These large-scale environments are constantly changing and require real-time AI processing working with multiple databases and distributed systems, leading to large-scale resource utilization and increasing cloud costs.

The Results

Using Granulate’s runtime optimization and capacity optimization solutions, Claroty realized performance improvements within two weeks. More efficient use of resources resulted in a 50% reduction in memory usage, a 15% reduction in CPU utilization, and a 20% reduction in Big Data processing costs.

Granulate Optimization Solutions

- 50% reduction in memory usage

- 20% reduction in costs

- 18% reduction in node count

- 15% utilization improvement

Optimizing AWS With Granulate

Granulate streamlines AWS optimization to maximize performance and reduce costs. Its SaaS delivery model requires no infrastructure to deploy and no code changes to your environment, and it works autonomously to optimize your AWS deployments.

Deploying Granulate is simple. The solution is agentless and non-intrusive: you grant read-only access to your usage metrics and resource attributes. Granulate’s AI engine analyzes the data and works autonomously to optimize workloads and performance.

You get real-time visibility and deep insight to more easily identify ways to optimize instances, modify autoscaling parameters, and eliminate waste. Granulate not only identifies optimization solutions but also automatically applies them to your environment. By automatically fine-tuning instance types, bidding strategies, scaling parameters, and resource allocation, you get maximum efficiency at reduced costs.

With Granulate, optimization is ongoing. As your environment changes, Granulate provides continuous monitoring and takes proactive measures to improve performance and cost-efficiency over time.

With just a single command, AWS customers can integrate the Granulate solution, which works with AWS tools to identify and optimize results. Companies have saved millions of dollars and dramatically improved performance with Granulate. In many cases, AWS users can double application performance.

Granulate is an authorized AWS Partner and can help you achieve application-aware optimization in as little as one week to boost performance.

"Within 2 weeks and without any customization whatsoever, we started to experience unbelievable performance improvement results that helped us achieve significant cost reduction."

Ariel Assaraf, CEO, Coralogix

About Granulate, an Intel Company

Real-time, continuous application performance optimization and capacity management for any type of workload, at any scale.

Granulate, an Intel company, empowers enterprises and digital native businesses with autonomous and continuous performance optimization, resulting in cloud and on-prem compute cost reduction. Available in the AWS, GCP, Microsoft Azure, and Red Hat marketplaces, the AI-driven technology operates on the runtime level to optimize workloads and capacity management without the need for code alterations.

Granulate offers a suite of optimization solutions, supporting containerized architecture, big data infrastructures, such as Spark, MapReduce, and Kafka, as well as resource management tools like Kubernetes and YARN. Granulate provides DevOps teams with optimization solutions for all major runtimes, such as Python, Java, Scala, and Go. Customers are seeing improvements in their job completion time, throughput, response time, and carbon footprint while realizing up to 45% cost savings.

Request a demo to see how Granulate can optimize your AWS performance and lower your compute costs.

References

[2] https://www.theregister.com/2023/07/18/aws_azure_cloud_market/

[3] https://www.cio.com/article/404314/94-of-enterprises-are-overspending-in-the-cloud-report.html

[5] https://www.aboutamazon.com/what-we-do/amazon-web-services

[6] https://www.computerweekly.com/feature/Cloud-egress-costs-What-they-are-and-how-to-dodge-them

[7] https://docs.aws.amazon.com/managedservices/latest/userguide/compute-optimizer.html

[8] https://aws.amazon.com/s3/storage-classes/

[9] https://www.cncf.io/reports/cncf-annual-survey-2022/

[11] https://aws.amazon.com/eks/

[12] https://aws.amazon.com/emr/

About the Author

Paul Dughi is the CEO at StrongerContent.com with more than 30 years of experience as a journalist, content strategist, and Emmy-award winning writer/producer. Paul has earned more than 30 regional and national awards for journalistic excellence and earned credentials in SEO, content marketing, and AI optimization from Google, HubSpot, Moz, Facebook, LinkedIn, SEMRush, eMarketing Institute, Local Media Association, the Interactive Advertising Bureau (IAB), and Vanderbilt University.